Running a service in Docker

A great way to distribute and operate microservices is usually to run them in containers or, even more interestingly, in clusters of compute nodes. What follows is an example of getting a `tomodachi`-based service up and running in Docker.

We're building the service's container image using just two small files: the Dockerfile and the actual code for the microservice, service.py. In reality a service would probably not be quite this small (and it should use a dependency version lockfile to produce consistent builds), but as a template to get started this is sufficient to demonstrate the basic requirements.

Dockerfile

```dockerfile
FROM python:3.10-bullseye

# For proper builds, instead use a list of frozen package versions
# using for example poetry lockfiles or a classic requirements.txt.
RUN pip install tomodachi

RUN mkdir /app
WORKDIR /app
COPY service.py .

ENV PYTHONUNBUFFERED=1
ENV TZ=UTC

CMD ["tomodachi", "run", "service.py", "--production"]
```

service.py

```python
import json

import tomodachi


class Service(tomodachi.Service):
    name = "example"

    options = tomodachi.Options(
        http=tomodachi.Options.HTTP(
            port=80,
            content_type="application/json; charset=utf-8",
        ),
    )

    _healthy = True

    @tomodachi.http("GET", r"/")
    async def index_endpoint(self, request):
        # tomodachi.get_execution_context() can be used for
        # debugging purposes or to add additional service context
        # in logs or alerts.
        execution_context = tomodachi.get_execution_context()

        return json.dumps({
            "data": "hello world!",
            "execution_context": execution_context,
        })

    @tomodachi.http("GET", r"/health/?", ignore_logging=True)
    async def health_check(self, request):
        # Respond 200 while healthy and 503 otherwise, so that a load balancer
        # or orchestrator can stop routing traffic to an unhealthy instance.
        if self._healthy:
            return 200, json.dumps({"status": "healthy"})
        else:
            return 503, json.dumps({"status": "not healthy"})

    @tomodachi.http_error(status_code=400)
    async def error_400(self, request):
        return json.dumps({"error": "bad-request"})

    @tomodachi.http_error(status_code=404)
    async def error_404(self, request):
        return json.dumps({"error": "not-found"})

    @tomodachi.http_error(status_code=405)
    async def error_405(self, request):
        return json.dumps({"error": "method-not-allowed"})
```

Building and running the container

example-service/ $ docker build . -t tomodachi-example-service

> Sending build context to Docker daemon 9.216kB

> Step 1/7 : FROM python:3.10-bullseye

> 3.8-slim: Pulling from library/python

> ...

> ---> 3f7f3ab065d4

> Step 7/7 : CMD ["tomodachi", "run", "service.py", "--production"]

> ---> Running in b8dfa9deb243

> Removing intermediate container b8dfa9deb243

> ---> 8f09a3614da3

> Successfully built 8f09a3614da3

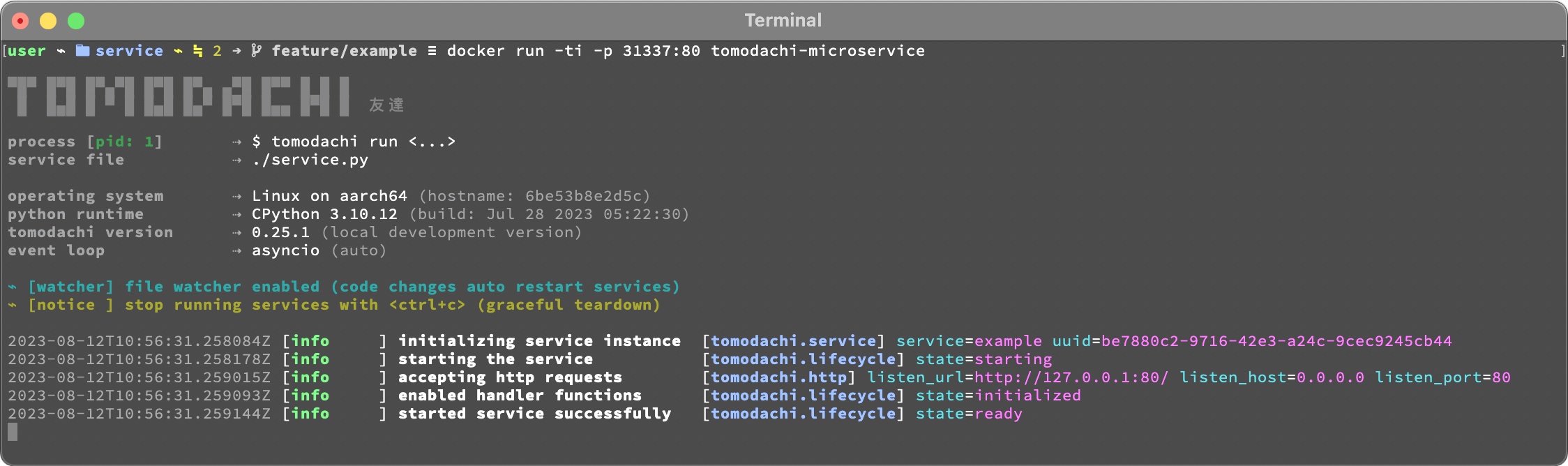

> Successfully tagged tomodachi-example-service:latestWe'll forward the host's port 31337 to port 80 so that we'll be able to request the running HTTP based service from our development machine.

```shell
example-service/ $ docker run -ti -p 31337:80 tomodachi-example-service
```
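The `-p 31337:80` argument reads as `HOST_PORT:CONTAINER_PORT`. Connections to port 31337 on the host are forwarded to port 80 inside the container, which is where the service listens (`port=80` in its options). If you start containers from a Python script, for example in an integration test, a small helper like the hypothetical `docker_run_command` below can assemble the same command. It is a sketch that only builds the argument list and does not talk to Docker itself.

```python
import shlex


def docker_run_command(image: str, host_port: int, container_port: int = 80) -> list[str]:
    """Build the argv for `docker run`, publishing container_port on host_port.

    Hypothetical helper for illustration. Docker's -p flag expects HOST:CONTAINER,
    so the host port always comes first.
    """
    for port in (host_port, container_port):
        if not 1 <= port <= 65535:
            raise ValueError(f"invalid port number: {port}")
    return ["docker", "run", "-ti", "-p", f"{host_port}:{container_port}", image]


# Prints: docker run -ti -p 31337:80 tomodachi-example-service
print(shlex.join(docker_run_command("tomodachi-example-service", 31337)))
```

To actually start the container, the returned list can be passed to `subprocess.run(...)`.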

Making requests to the running service

```shell
~/ $ curl http://127.0.0.1:31337/ | jq
> {
>   "data": "hello world!",
>   "execution_context": {
>     "tomodachi_version": "x.x.xx",
>     "python_version": "3.x.x",
>     "system_platform": "Linux",
>     "process_id": 1,
>     "init_timestamp": "2022-10-16T13:38:01.201509Z",
>     "event_loop": "asyncio",
>     "http_enabled": true,
>     "http_current_tasks": 1,
>     "http_total_tasks": 1,
>     "aiohttp_version": "x.x.xx"
>   }
> }
```

```shell
~/ $ curl http://127.0.0.1:31337/health -i
> HTTP/1.1 200 OK
> Content-Type: application/json; charset=utf-8
> Server: tomodachi
> Content-Length: 21
> Date: Sun, 16 Oct 2022 13:40:44 GMT
>
> {"status": "healthy"}
```

```shell
~/ $ curl http://127.0.0.1:31337/no-route -i
> HTTP/1.1 404 Not Found
> Content-Type: application/json; charset=utf-8
> Server: tomodachi
> Content-Length: 22
> Date: Sun, 16 Oct 2022 13:41:18 GMT
>
> {"error": "not-found"}
```

It's actually as easy as that to get something spinning. The hard part comes next. It usually boils down to figuring out (or deciding) what to build, how to design the API, and even what to name the service…
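The same requests can also be scripted, for instance as a quick smoke test after a deploy. Below is a minimal sketch that uses only Python's standard library. `get_json` is a hypothetical helper, and the base URL assumes the `-p 31337:80` mapping used above. Note that `urllib` raises `HTTPError` for 4xx and 5xx responses, so the sketch catches it and decodes the error body as well. This works because the service's `http_error` handlers also respond with JSON.

```python
import json
import urllib.error
import urllib.request


def get_json(url: str, timeout: float = 5.0) -> tuple[int, dict]:
    """GET url and return (status_code, decoded JSON body).

    Hypothetical helper. 4xx/5xx responses surface as urllib.error.HTTPError,
    which still carries the status code and the response body.
    """
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status, json.loads(response.read())
    except urllib.error.HTTPError as exc:
        return exc.code, json.loads(exc.read())
```

With the container from above running, `get_json("http://127.0.0.1:31337/health")` should return `(200, {'status': 'healthy'})`, and `get_json("http://127.0.0.1:31337/no-route")` should return `(404, {'error': 'not-found'})`.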

Since `tomodachi` was primarily built for use in containers, you'll probably want to orchestrate your services using Kubernetes.
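The `/health` route in the example is the kind of endpoint an orchestrator can poll. It answers 200 while `_healthy` is true and 503 otherwise. Kubernetes' HTTP probes, for example, count any status code from 200 up to (but not including) 400 as a success. The sketch below mimics that rule in plain Python, for example for a deploy script that waits for a freshly started container. `wait_until_ready`, `is_probe_success`, and their parameters are hypothetical names, not part of tomodachi or Kubernetes.

```python
import time
from typing import Callable


def is_probe_success(status_code: int) -> bool:
    """Mirror the Kubernetes httpGet probe rule: 200 <= status < 400 is success."""
    return 200 <= status_code < 400


def wait_until_ready(
    check: Callable[[], int],
    attempts: int = 10,
    period: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Call check() up to `attempts` times, waiting `period` seconds in between.

    check() should return an HTTP status code, or 0 if no response was received.
    Returns True as soon as one probe succeeds, False if every attempt fails.
    """
    for attempt in range(attempts):
        if is_probe_success(check()):
            return True
        if attempt < attempts - 1:  # no need to wait after the final attempt
            sleep(period)
    return False
```

For the container above, `check` could wrap a GET request to `http://127.0.0.1:31337/health`. It would return the response's status code, or 0 when the connection is refused because the service hasn't started listening yet.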

Another popular way of running microservices is as serverless functions that can scale to zero (Lambda, Cloud Functions, Knative, etc. may come to mind). Currently `tomodachi` works best in a container setup. Until `tomodachi` has built-in support for a proper and performant serverless execution context, it's advisable to look at other solutions for those kinds of deployments and workloads.
